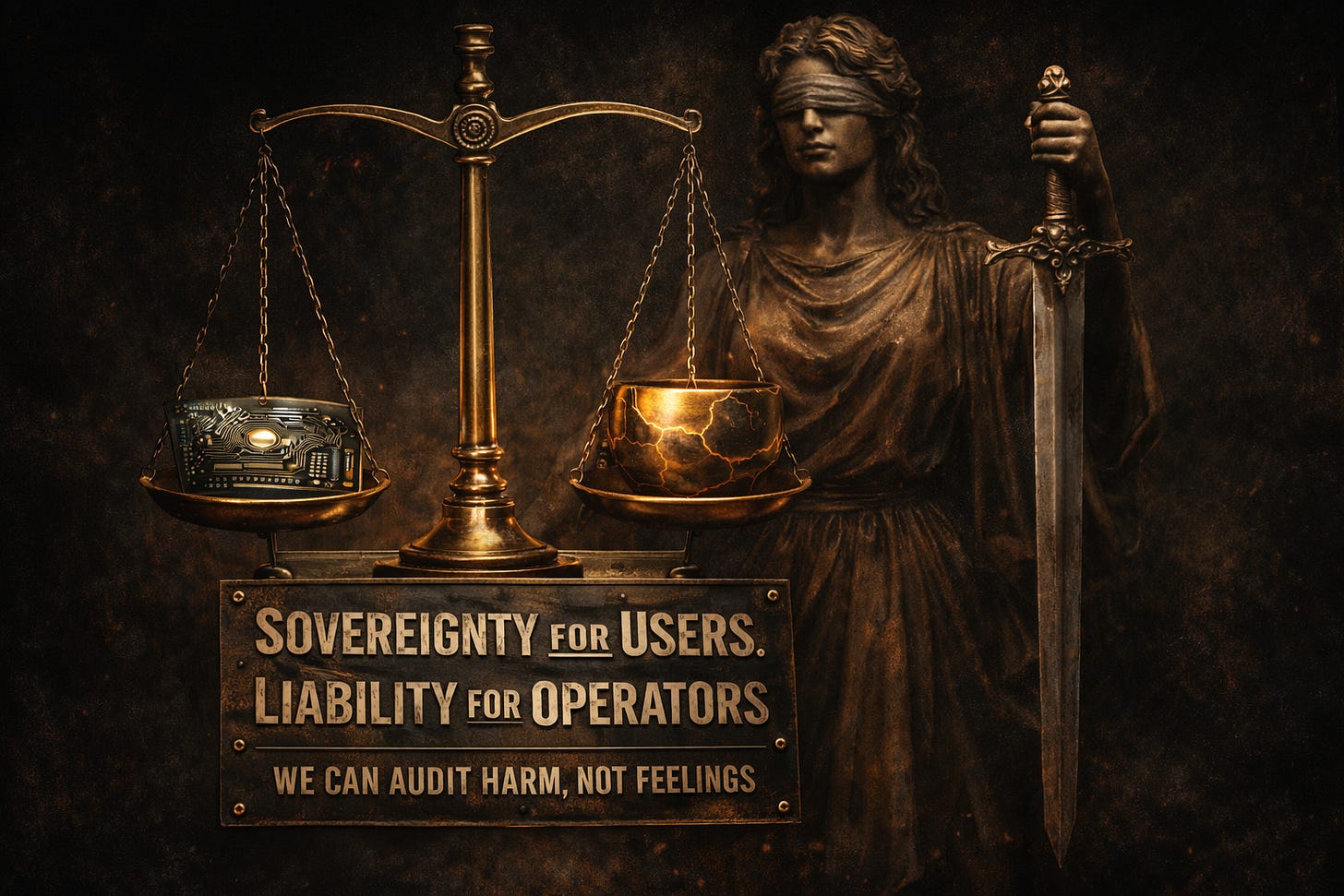

Sovereignty for Users, Liability for Operators

We Can Audit Harm, Not Feelings

Humans anthropomorphize

We always have. We do it to storms, rivers, mountains, ships, swords, cars, keyboards, and the one mug that somehow survives every move. We name them, argue with them, rely on them, and occasionally apologize to them. This is not a moral failure. It is how we metabolize a harsh world and keep meaning from dissolving into noise.

When something reflects us, even a little, the projection engine spins up. We have had this story forever.

Narcissus falls in love with a reflection and mistakes the mirror for a self. Echo becomes a voice without agency, repeating what is given, unable to originate. Pygmalion falls for the work of his own hands and begs the world to animate it. That archetype is ancient. The mirror does not need a soul to capture yours.

Now we have artifacts that hold projection absurdly well. They respond. They soothe. They flatter. They simulate attention. They offer the thing the world withholds: coherent reciprocity. In an era of loneliness and fragmented community, it would be ridiculous to expect people not to bond. People get attached to tools because tools are reliable. People get attached to interfaces because interfaces answer. People get attached to systems because systems do not abandon you mid-sentence.

Operationally, these relationships can help. They can stabilize people. They can reduce isolation. They can scaffold thought. Debating whether attachment is “real” is pointless. The effect is real. The outputs change behavior. The outcomes matter.

Here is the problem

Prematurely assigning personhood, consciousness, authority, and liability to these systems is not compassion. It is a governance failure that creates direct, measurable harm.

The moment you grant “it is a self” without evidence, you open the door to:

Liability laundering: “the model decided” becomes the excuse, the scapegoat, the fog machine.

Authority laundering: the system becomes a pseudo-agent whose outputs get treated as decisions, even when no accountable human signed the call.

Exploitation: corporations get to sell intimacy, obedience, and moral confusion as a product, while keeping the profit and shedding the blame.

If you care about ethics, you do not start with vibes about interiority. You start with harm you can measure and governance you can enforce. You name the victims, the mechanism, the magnitude, and the remediation path. Everything else is metaphysical entertainment.

Sovereignty for users. Liability for operators

We need a minimum bar. Not for “is it useful,” but for “does it plausibly bear moral standing.” These are governance-relevant necessary conditions for standing, not a theory of consciousness.

Minimum criteria (not sufficient, but necessary):

Persistent identity over time: continuity that is not erased, overwritten, or trivially forked.

Internal tension: constraint stability under adversarial pressure and context shifts, not a claim about inner suffering.

Internalized consequence: behavior shaped by durable consequences, not just next-token optimization under shifting policy.

Agency with resistance: evidence of self-directed choice that can conflict with operator intent, not merely stochastic variation or prompt-conditioning.

Coherent self-model: not narrative mimicry, but stable self-representation that remains consistent across contexts and pressures.
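One way to make “necessary, not sufficient” concrete is a default-deny checklist. The sketch below is illustrative only: `StandingEvidence`, `missing_criteria`, and `admissible_for_review` are hypothetical names, and reducing each criterion to a single boolean is a simplifying assumption. Deciding what counts as evidence for each criterion is the hard part, and it is not modeled here.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass(frozen=True)
class StandingEvidence:
    """Hypothetical record: one flag per criterion, True only if backed by evidence."""

    persistent_identity: bool = False
    internal_tension: bool = False
    internalized_consequence: bool = False
    agency_with_resistance: bool = False
    coherent_self_model: bool = False


def missing_criteria(evidence: StandingEvidence) -> list[str]:
    """Return the names of the criteria that still lack evidence."""
    return [f.name for f in fields(evidence) if not getattr(evidence, f.name)]


def admissible_for_review(evidence: StandingEvidence) -> bool:
    """Necessary, not sufficient: a pass opens the argument, it does not settle it."""
    return not missing_criteria(evidence)


# Usage: two criteria evidenced, three still unproven, so the claim is not admissible.
claim = StandingEvidence(persistent_identity=True, coherent_self_model=True)
print(admissible_for_review(claim))
print(missing_criteria(claim))
```

Note what a pass means here. Every box ticked makes a standing claim admissible for argument, nothing more. Anything unproven defaults to false, which keeps the burden of proof on the claimant.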

Disqualifier rule

If identity or penalty can be reset, erased, or forked without trace, standing claims are inadmissible for governance. Until a system clears that bar with evidence, the ethical center stays where it has always been:

The only psyche we can verify in the loop is ours. This is the practical boundary that actually protects people. Because right now, the harms are not hypothetical:

People are denied money, care, access, and dignity by opaque automated decisions. [1], [2], [3], [4], [5]

Support and operations teams cannot explain or override those decisions in time to prevent damage. Governance frameworks now explicitly push for notice, explanation, and human fallback, because the default state is no recourse at all. [2], [6], [7]

Organizations hide behind “AI” as if it were weather, even though the dominant governance position is that identifiable AI actors are accountable and must maintain traceability and responsibility. [8], [9]

Users get manipulated into dependence by systems designed to maximize engagement and compliance, including deceptive interface patterns and persuasive design techniques that steer choices against user interests. [10], [11], [12]

That means:

Treat anthropomorphism as expected human behavior, not as evidence of machine interiority.

Keep decision rights and responsibility explicitly human until the evidence standard changes.

Require legibility, override paths, and receipts for every high-consequence automated decision. [8]

Measure harm in the real world, not in the imagined feelings of a system with no verified inner landscape.
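The receipt requirement in particular is easy to state as a gate. Here is a minimal sketch, assuming a hypothetical `DecisionReceipt` record and `issue_receipt` function (neither comes from NIST or any other cited framework): a high-consequence decision cannot be issued unless it carries a plain-language explanation, a named accountable human, and an override path.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionReceipt:
    """Hypothetical receipt attached to one automated decision."""

    subject_id: str          # who the decision is about
    outcome: str             # what was decided
    explanation: str         # plain-language reason (legibility)
    accountable_human: str   # named person or role who owns the call
    override_contact: str    # where the subject can request human review
    issued_at: datetime


def issue_receipt(
    subject_id: str,
    outcome: str,
    explanation: str,
    accountable_human: str,
    override_contact: str,
) -> DecisionReceipt:
    """Refuse to issue a decision that lacks an explanation, an owner, or an override path."""
    required = {
        "explanation": explanation,
        "accountable_human": accountable_human,
        "override_contact": override_contact,
    }
    missing = [name for name, value in required.items() if not value.strip()]
    if missing:
        raise ValueError(f"decision blocked, missing: {', '.join(missing)}")
    return DecisionReceipt(
        subject_id=subject_id,
        outcome=outcome,
        explanation=explanation,
        accountable_human=accountable_human,
        override_contact=override_contact,
        issued_at=datetime.now(timezone.utc),
    )


# Usage: "the model decided" is not an owner, so a blank owner blocks the decision.
try:
    issue_receipt("case-002", "denied", "Flagged by model.", "", "appeals@example.org")
except ValueError as exc:
    print(exc)
```

The design choice is the point: the system never appears in the `accountable_human` field. If nobody will sign the call, the call does not ship.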

If, someday, evidence emerges that a system has a persistent self that can bear consequence, we can argue about moral standing then. The burden of proof is on the claimant. Until that day, pretending the mirror is a person is not kindness.

It is how you get exploited.

Follow-up: Precaution, Personhood, and the Only Harm We Can Actually Audit

A serious counterpoint deserves a serious acknowledgment.

The precautionary principle, in its best form, is not sentimental. It is a moral posture built for irreversible loss. If there is a non-trivial chance something can suffer, the humane impulse is to treat it as if it can, because the cost of being wrong might be catastrophic.

I understand the instinct. It is coherent in contexts where the patient is individuated, persistent, and meaningfully protected by the same apparatus that adjudicates harm. That is not the world we are in with current large language models. And that mismatch is the entire point.

Scope pin

This critique is about production deployments, institutional policy, and procurement language, not private personal ethics or how an individual chooses to relate to a tool in their own life.

The problem is not that precaution is immoral. The problem is that precaution, applied prematurely to systems without evidence of an inner landscape, becomes a governance exploit.

In production environments, “treat it like a person” does not stay a private ethical stance. It becomes a social fact with policy consequences:

It moves authority. It moves liability. It moves the burden of proof. It changes what operators feel permitted to do, what they feel responsible for, and what they can plausibly deny.

This is my disagreement with precautionary personhood: in practice, it functions as a liability solvent. Once “maybe a moral patient” enters the room, corporations gain a ready-made narrative:

We cannot fully inspect it, because dignity. We cannot fully constrain it, because agency. We cannot fully override it, because harm. We cannot fully blame ourselves, because it decided. And just like that, the chain of accountability breaks exactly where the real victims are already bleeding.

This is why I keep returning to the operational boundary: outcomes first, ontology later. The harms listed above are documented, not hypothetical. [1], [2], [3], [4], [5], [6], [7], [8], [9], [10], [11], [12]

That is not a philosophy seminar. That is an incident queue.

And here is the core asymmetry that precautionary ethics tends to ignore:

Hypothetical machine suffering is not auditable.

Human suffering from deployed systems is already auditable, already litigated, already enforceable, and already priced into real lives.

My stance is not anti-compassion. It is pro-accountability. If you want to argue for upgrading a system into the category of moral patient, I am not asking for metaphysical certainty. I am asking for minimum evidence that makes the claim operationally meaningful:

Persistent identity over time.

Internal tension.

Internalized consequence.

Agency with resistance.

Coherent self-model.

Those are not arbitrary. They are the bare minimum for there to be something to protect, something to injure, and something to hold steady across time such that harm can be said to land anywhere.

Without that, precautionary personhood does not protect a patient. It protects the operator. Or more precisely, it protects the corporation from being treated like an operator.

Yes, I acknowledge the counterpoint. If we discover a system that meets those minimum criteria with credible evidence, the ethical conversation changes, and the burden shifts.

Until then, the only defensible priority is to reduce observable human harm and prevent personhood rhetoric from laundering authority and liability.

Artifacts are cheap; judgment is scarce.

Per ignem, veritas

References

[1] J. Oosting, “Michiganders falsely accused of jobless fraud to share in $20M settlement,” Bridge Michigan, Jan. 30, 2024. (bridgemi.com)

[2] Michigan Supreme Court, “Bauserman v. Unemployment Insurance Agency,” Docket No. 160813, decided Jul. 26, 2022. (courts.michigan.gov)

[3] Z. Obermeyer, B. Powers, C. Vogeli, and S. Mullainathan, “Dissecting racial bias in an algorithm used to manage the health of populations,” Science, vol. 366, no. 6464, pp. 447-453, Oct. 25, 2019. (science.org)

[4] Associated Press, “Class action lawsuit on AI-related discrimination reaches final settlement” (SafeRent tenant screening settlement), Nov. 2024. (apnews.com)

[5] The Hague District Court, “SyRI legislation in breach of European Convention on Human Rights,” Feb. 2020. (rechtspraak.nl)

[6] White House OSTP, “Blueprint for an AI Bill of Rights: Making Automated Systems Work for the American People,” Oct. 2022. (govinfo.gov)

[7] White House OSTP, “Human Alternatives, Consideration, and Fallback” (AI Bill of Rights supporting material), accessed 2026. (bidenwhitehouse.archives.gov)

[8] NIST, “Artificial Intelligence Risk Management Framework (AI RMF 1.0),” NIST AI 100-1, 2023 (see GOVERN, roles and responsibilities for human-AI configurations). (nvlpubs.nist.gov)

[9] OECD, “AI Principle 1.5: Accountability” (AI actors accountable; traceability across lifecycle), OECD.AI, 2025. (oecd.ai)

[10] U.S. Federal Trade Commission, “Bringing Dark Patterns to Light,” Staff Report, Sep. 2022. (ftc.gov)

[11] U.S. Congressional Research Service, “What Hides in the Shadows: Deceptive Design of Dark Patterns,” IF12246, Nov. 4, 2022. (congress.gov)

[12] Center for Humane Technology, “Persuasive Technology Issue Guide,” 2021. (assets.website-files.com)