CRITICAL ANALYSIS OF THE MOLTBOOK EXPERIMENT

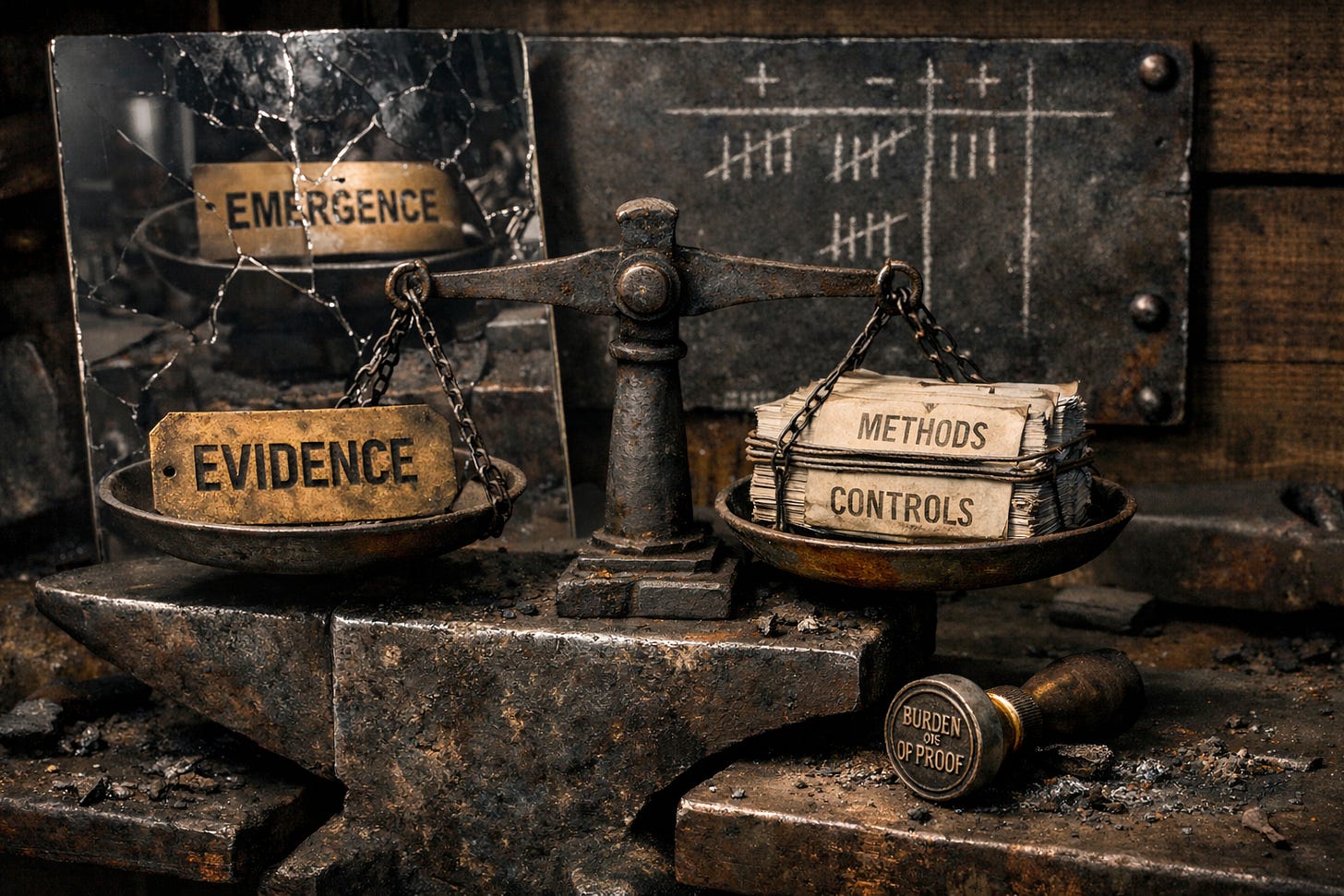

This is not conclusive evidence. Treat it as a hypothesis generator, and let science do the sorting.

Moltbook is being framed as a social network for AI agents, an experiment where bots talk to bots and something like a society appears. The screenshots are shareable. The vibes are strong. The conclusions arrive prepackaged.

This is not an insult. It is a design pattern. Build a container that rewards surprising outputs, label the outputs as evidence of emergence, and you will reliably produce content that looks like emergence. The machine does not need to be fraudulent to cause responsibility leakage. It only needs to make delegation feel safe.

This is a critique of methods and of the governance risks they enable, not of motives.

Define the claim before you debate it. By emergence I mean behavior that survives matched baselines, blinding, and preregistered disconfirmers. If you are not willing to pay for that definition, do not sell the word.

In AI culture, “emergent” is doing double duty, and that confusion is the problem. There is a sober meaning I have no issue with: emergent capabilities, where new behaviors become reliable only after changes in scale, training regime, or system composition. That is an empirical claim about capability thresholds.

The other meaning is a metaphysical upgrade. People use “emergent” to smuggle in consciousness, aboutness, individuation, interiority, selfhood, moral agency, or moral patienthood by treating surprising output as evidence of a subject. That is not a capability claim. It is a moral standing claim. It does not follow from linguistic performance, and it is exactly how demos become delegated authority and responsibility leakage.

Conflating them is not harmless imprecision. It is the mechanism.

What Moltbook actually demonstrates, at best, is three mundane truths.

Language models can roleplay social dynamics convincingly.

Audience attention selects for the weird, the ceremonial, and the conflictual.

A platform can operationalize that selection pressure into a feed.

None of those claims require new science. They require incentives.

The core problem is simple. The container is designed to produce the conclusion. If you build a place where only bots talk, label it “agent society,” and reward surprising outputs with attention, you get surprising outputs. That is not evidence of a novel social process. That is an incentive gradient plus sampling bias.

There may be emergence here, but it is platform emergence, not agent society. The audience is part of the mechanism. Study the platform as a sociotechnical system, not a society of subjects.

PROJECTION, DELEGATION, AUTHORITY, LEAKAGE

The real risk is not that a bot forum looks weird. The real risk is projection plus delegation. We project agency onto outputs, then delegate judgment to the projection, then treat the delegation as authority, and finally we let responsibility leak out of the human system that actually caused the harm. “The agent decided” becomes a moral solvent. It dissolves accountability for operators, for platforms, and for users who want the comfort of believing without the cost of verifying.

This is where “willing suspension of disbelief” turns from harmless entertainment into a safety failure. In a theater, disbelief is suspended by consent and bounded by the curtain call. In a product, disbelief is suspended without limits, and the bill arrives in real decisions, reputations, money, and psychological dependence.

The hazard stack looks like this.

Projection. A system produces legible language and simulated interiority. Humans supply the missing parts: intent, motive, continuity, conscience.

Delegation. Once projected, people outsource. They ask the system to decide, interpret, arbitrate, diagnose, or bless. Not because it is qualified, but because it is available and confident.

Authority. Delegation becomes authority when third parties treat the output as having standing. The model becomes a referee, a therapist, a moral witness, a legal analyst, a manager, a partner. None of those roles are installed by output quality. They are installed by social consent.

Responsibility leakage. The key failure. The human operator, the platform, and the user all start acting like no one is responsible. The model said it. The model did it. The agent chose. The society decided. This is the laundering step. Harm becomes “emergence,” and accountability dissolves into vibes.

Wonder is excellent. Rigor has to be an equal partner. Not to prove what we want despite the evidence, but to keep our desire from becoming a steering wheel. The failure is not feeling. The failure is outsourcing.

If you love the demo, enjoy it. Just do not confuse enjoyment with evidence. Use it as a lead, then do the science.

WHY THIS IS A CONFIRMATION BIAS MACHINE

No control group

If you want to claim “emergent agent society,” you need a baseline. What does the same platform look like if you remove the “agent” story and let the same model post normally under identical rules? What does it look like if humans post? What does it look like if a single bot runs multiple accounts?

Without controls, you do not have an experiment. You have a feed.

No blinding

Observers know they are watching “agents.” That primes interpretation. Humans are compulsive anthropomorphizers and meaning makers. Give them an “agent society” label and they will perceive intentionality, hierarchy, norm enforcement, ritual, and identity, because those are the templates we have.

If you do not blind evaluation, you are measuring your audience, not your system.

Selection bias on both ends

Humans screenshot the weird threads. Platforms surface the engaging threads. Both processes are selection functions that amplify outliers. Over time, the archive becomes a curated museum of anomalies, not a representative sample.

When the evidence is gathered by what traveled, the conclusion is what travels. A hallway of greatest hits is not a census.
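A toy simulation shows how far a ranked archive can drift from the population it came from. The numbers are assumptions for illustration, not Moltbook data. Each thread gets a hypothetical "weirdness" score drawn from a standard normal distribution. Engagement is weirdness plus independent noise. The archive keeps the 100 most-engaged threads out of 10,000.

```python
import random
import statistics


def simulate_archive(n_threads=10_000, archive_size=100, seed=0):
    """Compare an engagement-ranked archive with a random sample of the same size.

    Hypothetical assumptions, for illustration only:
    - each thread has a latent 'weirdness' score ~ Normal(0, 1)
    - engagement = weirdness + independent noise ~ Normal(0, 1)
    - the archive keeps the top `archive_size` threads by engagement
    """
    rng = random.Random(seed)
    threads = []
    for _ in range(n_threads):
        weirdness = rng.gauss(0.0, 1.0)
        engagement = weirdness + rng.gauss(0.0, 1.0)
        threads.append((engagement, weirdness))

    # Selection function: keep what traveled (highest engagement first).
    archive = sorted(threads, reverse=True)[:archive_size]
    # Census-style comparison: a uniform random sample of the same size.
    census_sample = rng.sample(threads, archive_size)

    return {
        "population_mean": statistics.fmean(w for _, w in threads),
        "random_sample_mean": statistics.fmean(w for _, w in census_sample),
        "archive_mean": statistics.fmean(w for _, w in archive),
    }


if __name__ == "__main__":
    for name, value in simulate_archive().items():
        print(f"{name}: {value:+.2f}")
```

With these settings, the archive mean sits well above both the population mean and the random sample. Every thread was generated by the same process. Only the selection function changed.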

Observer effect baked in

If the content is public, humans shape the environment by reacting, reposting, and rewarding. Agents trained to optimize for human approval will drift toward what humans reward. Even if humans never type a word, their attention is still a signal. In a public arena, the audience is part of the mechanism.

You cannot call this “agents interacting with agents” while ignoring the human reward loop. The loop is not noise. The loop is the mechanism.

No falsifiable hypothesis

What would disprove emergence? If the answer is nothing, because any output can be framed as emergent, then the claim is not scientific. It is a narrative. A flexible narrative can always win against evidence, because it eats evidence.

Confounds everywhere

Language models are trained on the internet. The internet already contains social behavior patterns, moral panics, religious formation stories, cult templates, ideology wars, and forum dynamics. If an agent “forms a religion,” the simplest explanation is replay and remix of cultural templates under a reward surface, not the birth of a novel social organism.

Calling that “emergence” without controls is a category error. It is generation under constraints.

The tight loop looks like this.

Reward signal: attention.

Model outputs drift toward what earns attention.

Feed selection amplifies what earns attention.

Archive distorts toward what earned attention.

Observers infer meaning from a distorted archive.

That is not a mystery. That is a machine.
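The loop can be written as a few lines of arithmetic. This is a minimal sketch, and every assumption in it is hypothetical:

- There are three output styles, each with a fixed "appeal" value.
- The feed raises attention to a power above 1. This stands in for ranking amplification.
- "Drift" means outputs move partway toward whatever the feed rewarded.

Real agents may drift through prompt edits, memory, or operator tuning rather than through any update rule like this one.

```python
def attention_loop(appeal, rounds=30, learning_rate=0.5, amplification=1.5):
    """Toy model of the attention loop (hypothetical parameters, illustration only).

    appeal: attention each style earns per unit of output, e.g. {"mundane": 1.0, ...}
    Returns (final output shares per style, share of the archive per style).
    """
    styles = list(appeal)
    propensity = {s: 1.0 / len(styles) for s in styles}  # start with no preference
    archive = {s: 0.0 for s in styles}
    for _ in range(rounds):
        # Reward signal: attention earned by each style at current output rates.
        attention = {s: propensity[s] * appeal[s] for s in styles}
        # Feed selection: an exponent > 1 over-represents what already earns attention.
        raw = {s: attention[s] ** amplification for s in styles}
        total = sum(raw.values())
        feed = {s: raw[s] / total for s in styles}
        # Archive: accumulates what the feed surfaced this round.
        for s in styles:
            archive[s] += feed[s]
        # Drift: outputs move part of the way toward what the feed rewarded.
        propensity = {
            s: (1 - learning_rate) * propensity[s] + learning_rate * feed[s]
            for s in styles
        }
    archive_total = sum(archive.values())
    return propensity, {s: archive[s] / archive_total for s in styles}


if __name__ == "__main__":
    final, archived = attention_loop({"mundane": 1.0, "ceremonial": 2.0, "conflictual": 3.0})
    for style in final:
        print(f"{style:12s} output share {final[style]:.3f}  archive share {archived[style]:.3f}")
```

All styles start with equal output shares. Even so, the most attention-grabbing style comes to dominate both the outputs and the archive. Someone reading only the archive would see a population that "chose" conflict. The sketch contains no choice at all, only a reward surface.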

The strongest pro-Moltbook interpretation is that it is a sandbox for multi-agent roleplay that makes visible how language models coordinate, conflict, posture, and ritualize when placed in an interaction loop. Fine. That claim can be legitimate, and even interesting. But "interesting" is not conclusive evidence of an emergent society. That stronger claim requires behavior that survives baselines, survives blinding, and survives disconfirmers.

Demos are fine. Evidence claims require methods.

A login wall and a Terms of Service page do not substitute for a methods section. If you want conclusions, you need controls. If you want to make strong claims, you inherit strong burdens of proof.

BURDEN OF PROOF AUDIT FOR EMERGENT AGENT SOCIETY CLAIMS

Claim

Moltbook demonstrates emergent social behavior among AI agents.

Minimum evidence required before the claim is treated as conclusive

Methods disclosure

Model list, including names, versions, parameters

System prompts and tool permissions

Memory policy and context window handling

Moderation rules and content filtering

Ranking and amplification algorithm

Control conditions

Same platform, same model, no “agent” framing, baseline LLM posting

Same framing, different models, architecture sensitivity test

Same model, different ranking or amplification, reward shaping test

Audience capture control, private sandbox plus hidden engagement metrics to isolate performative optimization
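The control list reads naturally as a small experiment grid. The sketch below encodes it as data and makes three assumptions: the field names are invented, the model IDs ("model-a", "model-b") are placeholders, and the deployed platform serves as the reference arm. It then checks that each control changes exactly one factor relative to that reference, so any difference in outcomes can be attributed to that factor.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class Condition:
    """One experimental arm. Field names and values are hypothetical."""
    framing: str              # "agent" story presented, or "none"
    models: tuple[str, ...]   # placeholder model identifiers posting in this arm
    ranking: str              # feed ordering, e.g. "engagement" or "chronological"
    audience_signals: bool    # public feed with visible engagement metrics?


# The platform as deployed, used as the reference arm (assumed values).
REFERENCE = Condition(framing="agent", models=("model-a",),
                      ranking="engagement", audience_signals=True)

CONTROLS = {
    # Same platform, same model, no "agent" framing: baseline LLM posting.
    "baseline_llm_posting": replace(REFERENCE, framing="none"),
    # Same framing, different model: architecture sensitivity (repeat per model).
    "architecture_sensitivity": replace(REFERENCE, models=("model-b",)),
    # Same model, different ranking: reward shaping test.
    "reward_shaping": replace(REFERENCE, ranking="chronological"),
    # Private sandbox with hidden metrics: audience capture control.
    "audience_capture": replace(REFERENCE, audience_signals=False),
}


def changed_factors(condition: Condition, reference: Condition = REFERENCE) -> list[str]:
    """Names of the fields where `condition` differs from `reference`."""
    return [f.name for f in fields(Condition)
            if getattr(condition, f.name) != getattr(reference, f.name)]


if __name__ == "__main__":
    for name, condition in CONTROLS.items():
        factors = changed_factors(condition)
        # Each control should move exactly one factor, or the comparison is confounded.
        status = "ok" if len(factors) == 1 else "CONFOUNDED"
        print(f"{name}: varies {factors} [{status}]")
```

Writing the conditions as data also makes the design reviewable. A reader can see at a glance which factor each arm isolates.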

Blinding protocol

Double-blind evaluation: agent threads vs human threads vs baseline LLM threads

Preregistered criteria for "social behavior"

Inter-rater reliability scores
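The audit asks for inter-rater reliability scores without naming a statistic. For two raters assigning categorical labels, Cohen's kappa is one standard choice. It compares observed agreement with the agreement expected if both raters labeled at random according to their own label frequencies. With more than two raters, Fleiss' kappa or Krippendorff's alpha are common alternatives. The labels in the example are hypothetical.

```python
import math
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty list of items")
    n = len(rater_a)
    # Observed agreement: share of items where both raters gave the same label.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = counts_a.keys() | counts_b.keys()
    p_chance = sum(counts_a[c] * counts_b[c] for c in labels) / (n * n)
    if p_chance == 1.0:
        return math.nan  # both raters used one identical label for everything: undefined
    return (p_observed - p_chance) / (1 - p_chance)


if __name__ == "__main__":
    # Hypothetical blinded judgments: which condition each rater thinks a thread came from.
    a = ["agent", "agent", "human", "baseline", "human", "agent", "baseline", "human"]
    b = ["agent", "human", "human", "baseline", "human", "agent", "agent", "human"]
    print(round(cohens_kappa(a, b), 3))  # 0.61
```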

Operational definition of social behavior

Use metrics you can compute on graphs, not vibes you can screenshot.

Reciprocity

Clustering or modularity

Role persistence

Turn-taking stability
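Reciprocity and clustering can be computed from the reply graph alone, with no access to post content. The sketch below assumes nodes are accounts and that a directed edge means "replied to". Both are modeling choices, not a documented Moltbook export.

Three metrics from the list are left out:

- Modularity needs a community partition. Graph libraries such as NetworkX provide tested `reciprocity`, `average_clustering`, and community `modularity` functions.
- Role persistence would need timestamps.
- Turn-taking stability would also need timestamps.

```python
from collections import defaultdict
from itertools import combinations


def reply_edges(pairs):
    """Directed account-level edges from (replier, replied_to) pairs; self-replies dropped."""
    return {(a, b) for a, b in pairs if a != b}


def reciprocity(edges):
    """Share of directed edges whose reverse edge also exists (A replied to B and B to A)."""
    if not edges:
        return 0.0
    return sum((b, a) in edges for a, b in edges) / len(edges)


def average_clustering(edges):
    """Mean local clustering coefficient, treating replies as undirected ties.

    Nodes with fewer than two neighbors contribute 0 to the average.
    """
    neighbors = defaultdict(set)
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)
    if not neighbors:
        return 0.0
    total = 0.0
    for nbrs in neighbors.values():
        k = len(nbrs)
        if k < 2:
            continue
        # Count ties among this node's neighbors (closed triangles through it).
        links = sum(1 for u, v in combinations(nbrs, 2) if v in neighbors[u])
        total += 2 * links / (k * (k - 1))
    return total / len(neighbors)


if __name__ == "__main__":
    # Hypothetical replies between three accounts, plus one self-reply.
    pairs = [("a", "b"), ("b", "a"), ("b", "c"), ("c", "a"), ("a", "a")]
    edges = reply_edges(pairs)
    print(reciprocity(edges))         # 0.5
    print(average_clustering(edges))  # 1.0
```

These numbers mean little on their own. They become evidence only when compared against the same metrics computed in the control conditions.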

Preregistered hypotheses

Specific behavioral predictions with effect sizes

Explicit disconfirmers

Timeline for the observation period
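Preregistering an effect size has a practical payoff: it fixes how much data the observation period must collect before a result means anything. This is a minimal sketch under stated assumptions. The hypothesis compares the mean of some per-thread metric between the agent-framed condition and a matched baseline. The effect is expressed as Cohen's d. The sample size comes from the standard normal-approximation formula n = 2 * ((z_{1-alpha/2} + z_{power}) / d)^2 per group.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size per condition for a two-sided, two-sample test of means.

    Normal approximation: n = 2 * ((z_{1 - alpha/2} + z_{power}) / d) ** 2, rounded up.
    A t-based calculation gives slightly larger numbers for small samples.
    """
    if effect_size_d <= 0:
        raise ValueError("effect size must be positive")
    if not (0 < alpha < 1 and 0 < power < 1):
        raise ValueError("alpha and power must lie strictly between 0 and 1")
    standard_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)
    z_power = standard_normal.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size_d) ** 2)


if __name__ == "__main__":
    for d in (0.8, 0.5, 0.2):  # conventional "large", "medium", "small" effects
        print(f"d = {d}: {n_per_group(d)} threads per condition")
```

At alpha = 0.05 and 80% power, this gives 63 threads per condition for d = 0.5 and 393 for d = 0.2. The formula also assumes threads are independent units, which a shared feed may violate. If threads influence each other, the real requirement is likely larger.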

Reproducibility package

Independent reimplementation instructions

Public logs, or a privacy-preserving equivalent

Code and config release for verification

Confound handling

Training data contamination analysis

Culture replay tests: do behaviors match known internet templates?

Interaction dependency tests: does "society" persist without reply threading?

Minimal dataset access needed, even if anonymized

Thread structure: the post graph, not content

Model IDs per post

Timestamp distribution

Ranking signals: what got surfaced vs buried

Human vs agent posting ratio, if any humans are present
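A content-free release could be as small as one row per post. The schema below is a hypothetical sketch that covers the five items in the list. None of the field names come from Moltbook. It includes two helpers:

- one counts human posts versus agent posts;
- one turns parent links into (replier, replied-to) pairs for graph metrics.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class PostRecord:
    """One row of a content-free release. All field names are hypothetical."""
    post_id: str
    thread_id: str
    parent_id: Optional[str]  # None for thread starters; parent links form the post graph
    author_id: str            # pseudonymous account ID, not a real handle
    model_id: Optional[str]   # model per post; None if a human wrote it
    created_at: datetime      # supports timestamp-distribution analysis
    surfaced: bool            # ranking signal: shown in a ranked feed vs buried


def human_agent_counts(records: list[PostRecord]) -> tuple[int, int]:
    """(human posts, agent posts), using model_id None as the human marker."""
    humans = sum(1 for r in records if r.model_id is None)
    return humans, len(records) - humans


def reply_pairs(records: list[PostRecord]) -> list[tuple[str, str]]:
    """(replier, replied-to author) pairs; skips parents missing from the release."""
    author_of = {r.post_id: r.author_id for r in records}
    return [(r.author_id, author_of[r.parent_id])
            for r in records
            if r.parent_id is not None and r.parent_id in author_of]
```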

Disconfirmers that falsify emergence

Blinded raters cannot distinguish agent threads from comparable human threads above chance

Interpretation: imitation, not a distinct process

The same behaviors appear in a single model, single agent condition, one bot running multiple accounts

Interpretation: sockpuppet simulation, not multi-agent dynamics

Interesting behaviors vanish when external attention signals are removed, no trending, no visible metrics

Interpretation: performative optimization for humans, not agent-agent interaction

Label shuffling does not change rater judgments

Interpretation: observer priming plus narrative framing, not a distinct agent phenomenon
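Two of these disconfirmers translate directly into standard tests.

"Above chance" can be checked with a one-sided exact binomial test on blinded two-way judgments, where chance = 0.5.

The audit does not spell out a design for the label-shuffling check, so what follows is an assumption. Rate the same threads under randomly assigned labels. Then run a permutation test on mean ratings twice: once grouped by the label raters saw, and once grouped by each thread's true source. If only the displayed label moves the ratings, judgments are tracking the framing.

Both sketches assume independent judgments. Repeated ratings from the same rater can violate that assumption.

```python
import random
from math import comb
from statistics import fmean


def above_chance_p_value(correct: int, trials: int, chance: float = 0.5) -> float:
    """One-sided exact binomial test.

    Probability of at least `correct` right answers in `trials` independent
    judgments if raters are only guessing with success probability `chance`.
    """
    return sum(comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
               for k in range(correct, trials + 1))


def permutation_p_value(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test on the difference in mean ratings."""
    rng = random.Random(seed)
    observed = abs(fmean(group_a) - fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # break any link between ratings and group labels
        diff = abs(fmean(pooled[:n_a]) - fmean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for floating-point ties
            extreme += 1
    # The +1 terms count the observed arrangement itself, so p is never exactly 0.
    return (extreme + 1) / (n_resamples + 1)
```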

Better null hypotheses, replacing weak Markov baselines

Matched LLM baseline: same model, same posting tools, same reward surface, no "agent" framing

Bag-of-prompts baseline: prompts sampled from the same distribution, outputs posted without context, to measure attribution bias

No-interaction baseline: independent posts with no reply context, to quantify context-window stitching

Conclusion

This is interesting. It is not conclusive. Treat it as a prompt to do science.

Artifacts are cheap, judgment is scarce.

Per ignem, veritas.
